Now that we have a defined the parameters of our modified mean-reverting Ornstein-Uhlenbeck process which defines our Temperature dynamics, in this tutorial we will now be looking to implement different models for our time varying volatility patterns.

We have a number of options to model temperature volatility across seasons.

- Piece-wise constant functions, volatility for each season

- Parametric Regression – Polynomial

- Local and Nonparametric Regression – Splines

- Fourier series to model the volatility of temperature

- Stochastic differential equation

Summary of our Modified OU Dynamics

We Finally have our dynamics well described and we’re convinced that the expectation of this stochastic process is equal to the longrun average of our Daily Average Temperature, DAT.

$$\large dT_t = \left[\frac{d\bar{T_t}}{dt} + 0.438 (\bar{T_t} – T_t)\right]dt + \sigma_t dW_t$$

Where our changing average of DAT \( \large \bar{T_t} \) is:

$$\large \bar{T_t} = 16.8 + (3.32e-05)t + 5.05 sin((\frac{2\pi}{365.25})t + 1.27)$$

First derivative does not need finite difference approximation here because the function is differentiable \( \large \bar{T’_t} \)

$$\large \bar{T’_t} = (3.32e-05) + 5.05 (\frac{2\pi}{365.25}) cos((\frac{2\pi}{365.25})t + 1.27)$$

Where the date 01-Jan 1859 corresponds with the first ordinal number 0.

Volatility of Temperature Process

The volatility estimator is based on the the quadratic variation of of the temperature process \( \Large T_t \)

$$\Large \hat{\sigma}_t = \sqrt{ \frac{1}{N_t} \sum^{N-1}_{i=0} (T_{i+1} – T_i)^2 }$$

\(\Large \sigma_t\) is the dynamic volatility of the Temperature process. This could be both daily as with our temperature dynamics or seasonal, for example monthly.

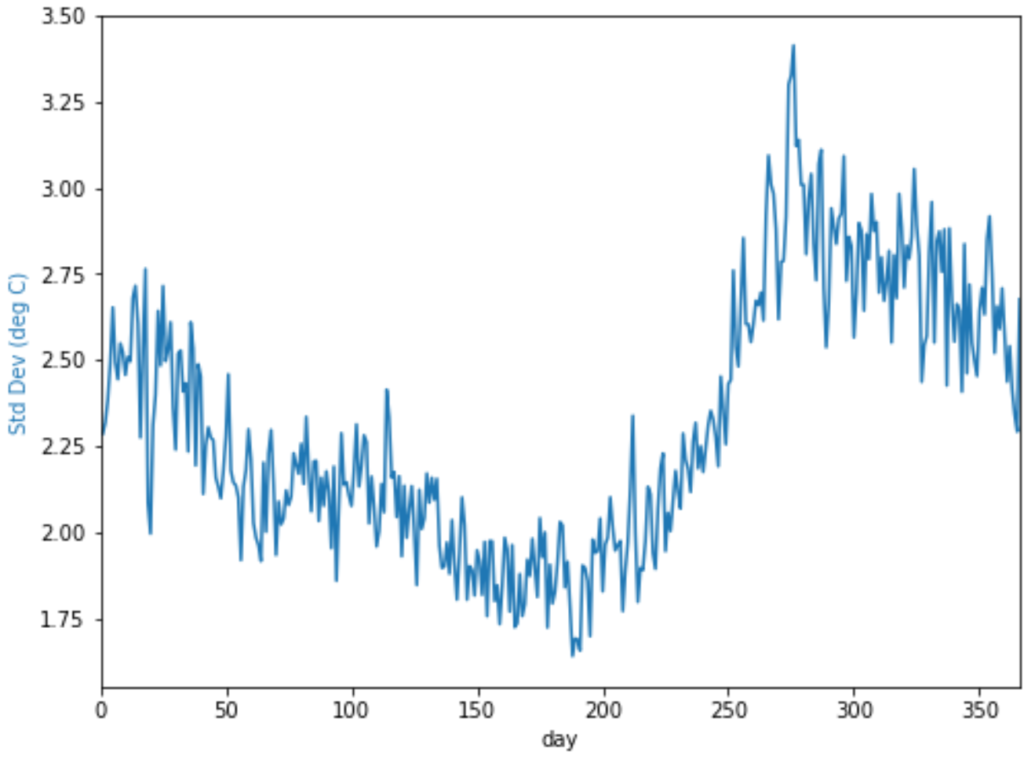

vol = temp_t.groupby(['day'])['T'].agg(['mean','std'])

vol['std'].plot(color='tab:blue', figsize=(8,6))

plt.ylabel('Std Dev (deg C)',color='tab:blue')

plt.xlim(0,366)

plt.show()

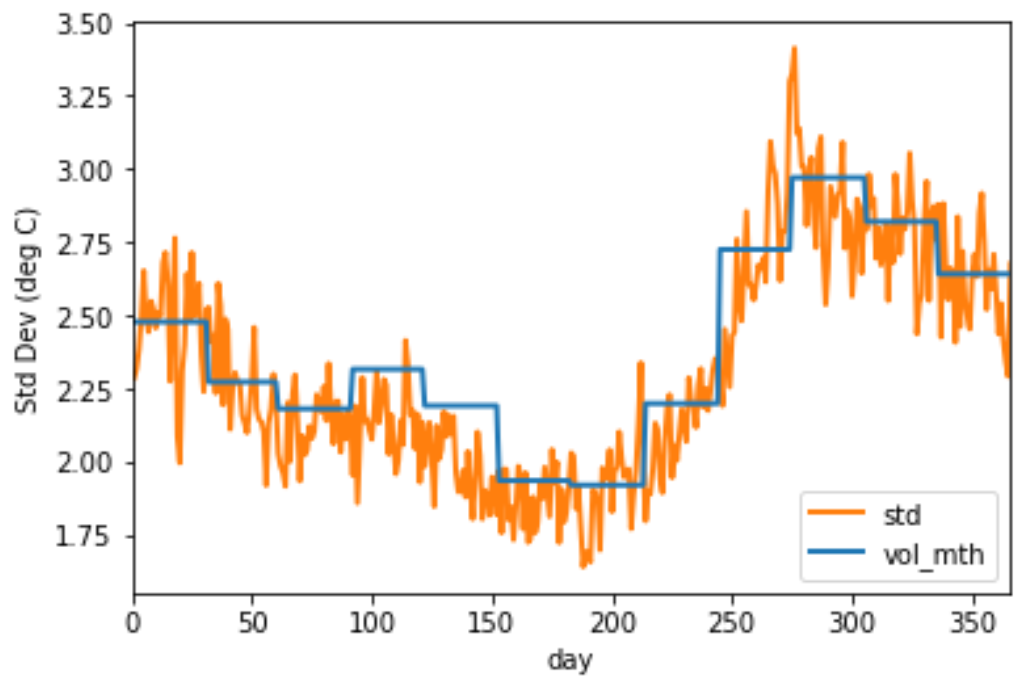

1. Piece-wise constant functions

vol_days = temp_t.groupby(['day'])['T'].agg(['mean','std'])

vol_mths = temp_t.groupby(['month'])['T'].agg(['mean','std'])

vol_days["days"] = vol_days.index

def change_month(row):

date = dt.datetime(2016, 1, 1) + dt.timedelta(row.days - 1)

return vol_mths.loc[date.month,'std']

vol_days['vol_mth'] = vol_days.apply(change_month, axis=1)

vol_days[['std', 'vol_mth']].plot(color=['tab:orange','tab:blue'],linewidth=2)

plt.ylabel('Std Dev (deg C)')

plt.xlim(0,366)

plt.legend(loc='lower right')

plt.show()

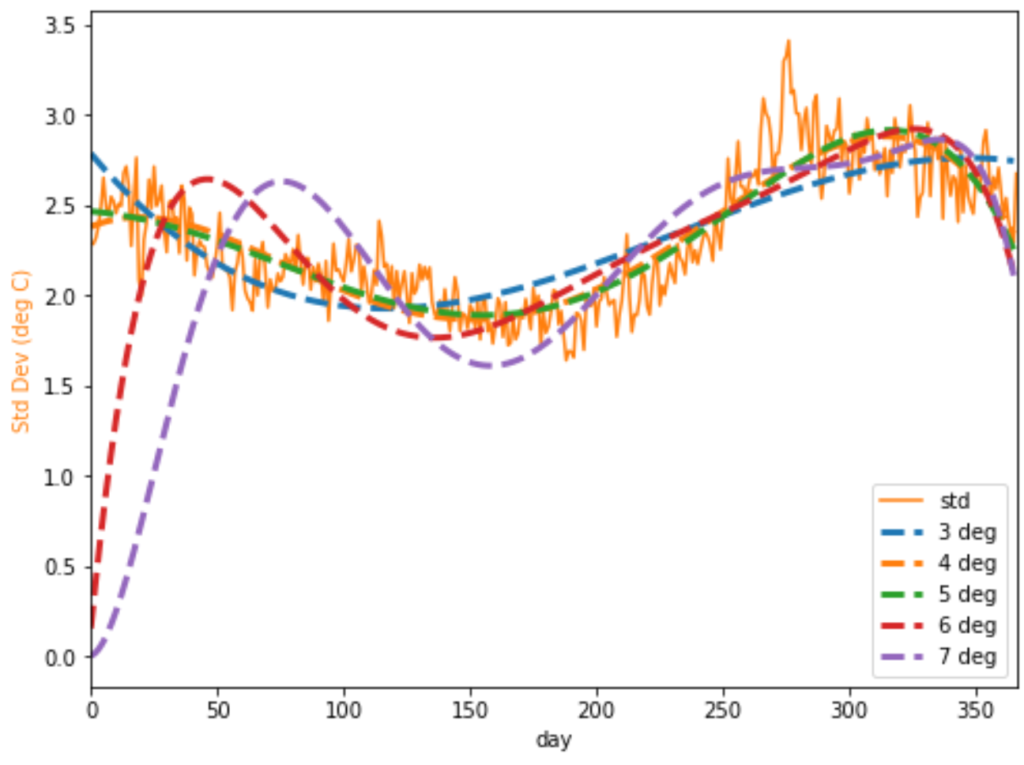

2. Parametric Regression – Polynomials

import statsmodels.api as sm

from sklearn.preprocessing import PolynomialFeatures

# Preprocess x, y combinations to handle dimensionality of Polynomial Features

x = np.array(vol['std'].index)

y = np.array(vol['std'].values)

#Create single dimension

x= x[:,np.newaxis]

y= y[:,np.newaxis]

inds = x.ravel().argsort() # Sort x values and get index

x = x.ravel()[inds].reshape(-1,1)

y = y[inds] #Sort y according to x sorted index

# initialise degrees of freedom

poly_feats3 = PolynomialFeatures(degree=3)

poly_feats4 = PolynomialFeatures(degree=4)

poly_feats5 = PolynomialFeatures(degree=5)

poly_feats6 = PolynomialFeatures(degree=6)

poly_feats7 = PolynomialFeatures(degree=7)

# Fit transform to number of polynomial features

xp3 = poly_feats3.fit_transform(x)

xp4 = poly_feats4.fit_transform(x)

xp5 = poly_feats5.fit_transform(x)

xp6 = poly_feats6.fit_transform(x)

xp7 = poly_feats7.fit_transform(x)

# Fit using ordinary least squares

p3 = sm.OLS(y, xp3).fit()

p4 = sm.OLS(y, xp4).fit()

p5 = sm.OLS(y, xp5).fit()

p6 = sm.OLS(y, xp6).fit()

p7 = sm.OLS(y, xp7).fit()

# Predict using original data and model fit

poly3 = p3.predict(xp3)

poly4 = p4.predict(xp4)

poly5 = p5.predict(xp5)

poly6 = p6.predict(xp6)

poly7 = p7.predict(xp7)

vol = temp_t.groupby(['day'])['T'].agg(['mean','std'])

vol['std'].plot(color='tab:orange', figsize=(8,6))

plt.plot(poly3, '--', linewidth=3, label='3 deg')

plt.plot(poly4, '--', linewidth=3, label='4 deg')

plt.plot(poly5, '--', linewidth=3, label='5 deg')

plt.plot(poly6, '--', linewidth=3, label='6 deg')

plt.plot(poly7, '--', linewidth=3, label='7 deg')

plt.ylabel('Std Dev (deg C)',color='tab:orange')

plt.xlim(0,366)

plt.legend(loc='lower right')

plt.show()

Choose Model by Parsimony

Using the Akaike information criterion to judge parsimony between model fit and complexity.

$$\Large AIC = 2k – 2ln(\hat{L})$$

where \(k\) is the numbers of parameters and \(\hat{L}\) is the log-likelihood

p3.aic, p4.aic, p5.aic, p6.aic, p7.aic

plt.figure(figsize=(8,6))

plt.plot(x, y, color='tab:orange', label='original')

plt.plot(x, poly5, '--', linewidth=3, label='spline fit')

plt.ylabel('Std Dev (deg C)',color='tab:orange')

plt.xlim(0,366)

plt.legend(loc='lower right')

plt.show()

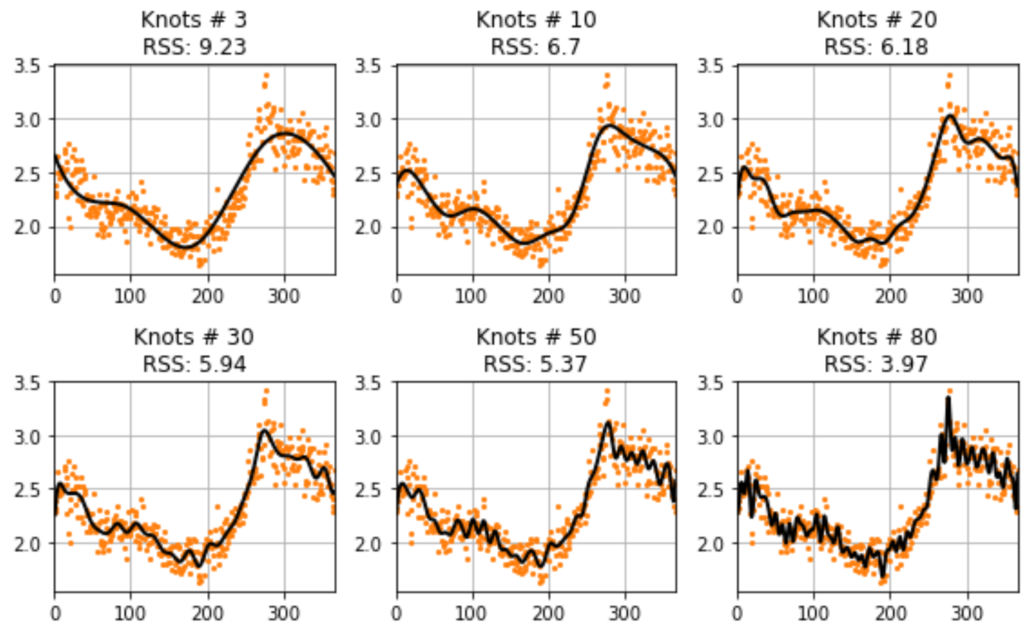

3. Local and Nonparametric Regression – Splines

Interpolation is a method of estimating unknown data points in a given range. Spline interpolation is a type of piecewise polynomial interpolation method. Spline interpolation is a useful method in smoothing the curve or surface data.

B-spline curve fitting

B-spline or basis spline is a curve approximation method, and requires the parameters such as knots, spline coefficients, and degree of a spline. To construct a smoother spline fit, we need to specify the number of knots for the target data.

Knots are joints of polynomial segments.

Steps

- Based on knots number, we’ll determine the new x data vector by using the quantile function.

- splrep function returns tuple containing the vector of:

- knots

- B-spline coefficients

- degree of the spline

- Use BSpline class to construct spline fit on x vector data.

from scipy import interpolate

x = np.array(vol['std'].index)

y = np.array(vol['std'].values)

knot_numbers = 5

x_new = np.linspace(0, 1, knot_numbers+2)[1:-1]

q_knots = np.quantile(x, x_new)

t,c,k = interpolate.splrep(x, y, t=q_knots, s=1)

yfit = interpolate.BSpline(t,c,k)(x)

plt.figure(figsize=(8,4))

plt.plot(x, y, label='Volatility')

plt.plot(x, yfit, label='Spline Fit')

plt.ylabel('Std Dev (deg C)')

plt.xlabel('Day in Year')

plt.xlim(0,366)

plt.legend(loc='lower right')

plt.show()

def spline(knots, x, y):

x_new = np.linspace(0, 1, knots+2)[1:-1]

t, c, k = interpolate.splrep(x, y, t=np.quantile(x, x_new), s=3)

yfit = interpolate.BSpline(t,c, k)(x)

return yfit

knots = [3, 10, 20, 30, 50, 80]

i = 0

fig, ax = plt.subplots(nrows=2, ncols=3, figsize=(8, 5))

for row in range(2):

for col in range(3):

ax[row][col].plot(x, y, '.',c='tab:orange', markersize=4)

yfit = spline(knots[i], x, y)

rss = np.sum( np.square(y-yfit) )

ax[row][col].plot(x, yfit, 'k', linewidth=2)

ax[row][col].set_title("Knots # "+str(knots[i])+"\nRSS: "+str(round(rss,2)), color='k')

ax[row][col].set_xlim(0,366)

ax[row][col].grid()

i=i+1

plt.tight_layout()

plt.show()

4. Fourier series to model the volatility of temperature

$$\Large \sigma_t = a_0 + \sum^I_{i=1} a_i cos(i\omega t) + \sum^J_{j=1} b_j sin(j\omega t)$$

Let’s take a look at long term volatility

std = temp_t['T'].rolling(30).std()

std.plot()

plt.plot([std.index[0], std.index[-1]], [std.mean(),std.mean()], 'k', linewidth=2)

plt.show()

from symfit import parameters, variables, sin, cos, Fit

def fourier_series(x, f, n=0):

"""

Returns a symbolic fourier series of order `n`.

:param n: Order of the fourier series.

:param x: Independent variable

:param f: Frequency of the fourier series

"""

# Make the parameter objects for all the terms

f=2*np.pi/365.25

a0, *cos_a = parameters(','.join(['a{}'.format(i) for i in range(0, n + 1)]))

sin_b = parameters(','.join(['b{}'.format(i) for i in range(1, n + 1)]))

# Construct the series

series = a0 + sum(ai * cos(i * f * x) + bi * sin(i * f * x)

for i, (ai, bi) in enumerate(zip(cos_a, sin_b), start=1))

return series

x, y = variables('x, y')

# Define frequency as yearly

w = 2*np.pi/365.25

model_dict = {y: fourier_series(x, f=w, n=3)}

print('Example of Fourier Model \n', model_dict)

if isinstance(std.index , pd.DatetimeIndex):

first_ord = std.index.map(dt.datetime.toordinal)[0]

std.index=std.index.map(dt.datetime.toordinal)

time_mask = -int(30*365.25)

# Make step function data

xdata = std.index[time_mask:] - first_ord

ydata = np.array(std[time_mask:])

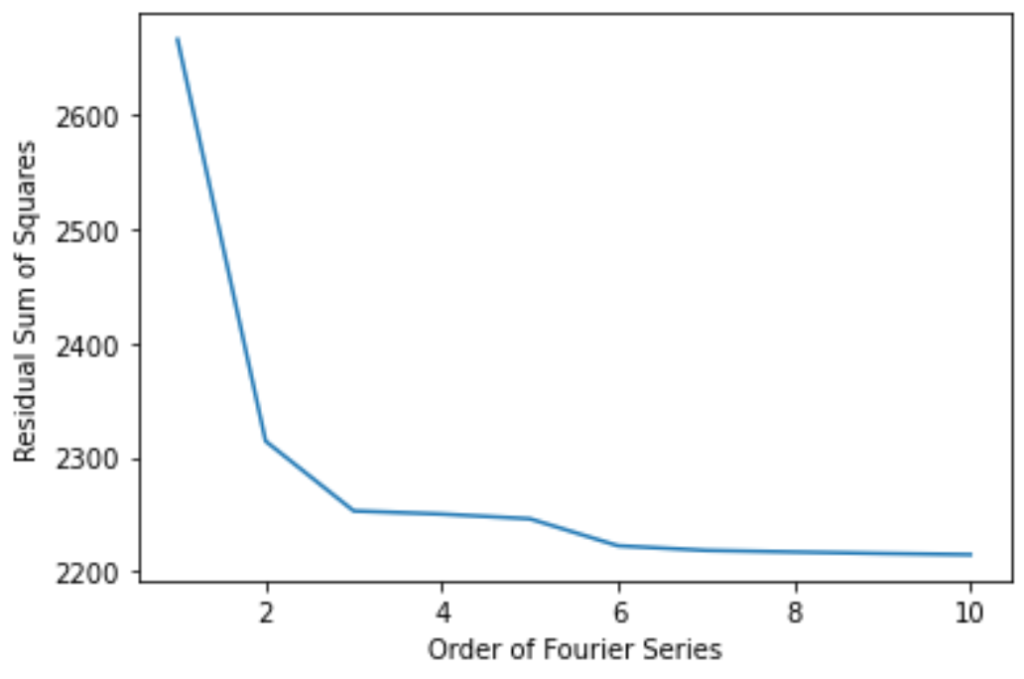

rss = []

for fourier_order in range(1,11):

model_dict = {y: fourier_series(x, f=w, n=fourier_order)}

# Define a Fit object for this model and data

fit = Fit(model_dict, x=xdata, y=ydata)

fit_result = fit.execute()

rss.append(fit_result.chi_squared)

plt.plot(range(1,11),rss)

plt.ylabel('Residual Sum of Squares')

plt.xlabel('Order of Fourier Series')

plt.show()

model_dict = {y: fourier_series(x, f=w, n=6)}

# Define a Fit object for this model and data

fit = Fit(model_dict, x=xdata, y=ydata)

fit_result = fit.execute()

# Map back to datetime from ordinal

xdata2=(xdata+first_ord).map(dt.datetime.fromordinal)

# Plot the result

plt.plot(xdata2, ydata)

plt.plot(xdata2, fit.model(x=xdata, **fit_result.params).y, 'k-', linewidth=3)

print("Fourier Series Parameters:")

for i,v in fit_result.params.items():

print(i,round(v,3))

fourier = pd.DataFrame({'date':xdata2,

'day':xdata2.dayofyear,

'volatility':ydata,

'model':fit.model(x=xdata, **fit_result.params).y})

fourier.index = fourier.date

fourier = fourier.drop(columns='date')

fourier_model = fourier.groupby(['day'])['model'].agg(['mean','std'])

vol = temp_t[time_mask:].groupby(['day'])['T'].agg(['mean','std'])

x = np.array(vol['std'].index)

y = np.array(vol['std'].values)

fourier_model['mean'].plot(color='tab:blue', figsize=(8,6), label='Fourier Series')

plt.ylabel('Std Dev (deg C)',color='tab:orange')

plt.plot(x, y, color='tab:orange', label='original')

plt.xlim(0,366)

plt.legend(loc='lower right')

plt.show()

5. Stochastic Differential Equations

$$\large dT_t = \left[\frac{d\bar{T_t}}{dt} + 0.438 (\bar{T_t} – T_t)\right]dt + \sigma_t dW_{1,t}$$

$$\large d\sigma_t = k_\sigma (\bar{\sigma_t} – \sigma_t)dt + \gamma_t dW_{2,t}$$

temp_t['day'] = temp_t.index.dayofyear

temp_t['month'] = temp_t.index.month

temp_t['year'] = temp_t.index.year

vol = temp_t.groupby(['year','month'])['T'].agg(['mean','std'])

vol = vol.reset_index()

vol['std'].plot(figsize=(8,6))

plt.plot([0, len(vol)], [vol['std'].mean(),vol['std'].mean()], 'k', linewidth=2)

plt.ylabel('Std Dev (deg C)')

plt.title('Monthly Volatility of Observed Daily Average Temperatures', color='k',)

print('Trend or long term volatility is easy: ~', round(vol['std'].mean(),3))

plt.show()

Estimating volatility of volatility process

If we use the estimator presented in Alaton et al.

The volatility estimator is based on the the quadratic variation of of the volatility process \(\Large \sigma_t\)

$$\Large \hat{\gamma_t} = \sqrt{ \frac{1}{N_t} \sum^{N-1}_{i=0} (\sigma{i+1} – \sigma_i)^2 } $$

print('Gamma is: ', round(vol['std'].std(),3))

model = AutoReg(vol['std'], lags=1, old_names=True,trend='n')

model_fit = model.fit()

coef = model_fit.params

res = model_fit.resid

print('Rate of mean reversion of volatility process is : ', coef[0])

print(model_fit.summary())

Stochastic Differential Equation Completed!

$$\large dT_t = \left[\frac{d\bar{T_t}}{dt} + 0.438 (\bar{T_t} – T_t)\right]dt + \sigma_t dW_{1,t}$$

$$\large d\sigma_t = 0.954 (2.15 – \sigma_t)dt + 0.580 dW_{2,t}$$

In order to simulate paths, we would use the Euler scheme approximation.

- For each month, \( \Large \sigma_t \) is simulated

- Temperature is simulated for each day within the month